Introduction

The rise of artificial intelligence in code generation and problem-solving has transformed the way we approach both technical and creative tasks. From automating repetitive coding chores to providing insights into complex problem domains, AI tools have become essential companions for developers, researchers, and learners alike. Among the many AI models available today, two have stood out in my personal experience: OpenAI’s GPT and xAI’s Grok. Each offers unique strengths that cater to different needs, and in this article, I’ll dive deep into my firsthand experiences with both, exploring their capabilities, limitations, and the contexts in which they shine. Whether you’re a programmer tackling intricate algorithms or a researcher sifting through vast amounts of data, understanding these tools can significantly enhance your workflow.

The journey of AI in problem-solving is a fascinating one. Over the past decade, we’ve seen models evolve from simple rule-based systems to sophisticated neural networks capable of understanding context and generating human-like responses. GPT and Grok represent two distinct approaches within this landscape: GPT as a precision-driven specialist and Grok as a versatile generalist. My goal here is to provide a detailed comparison based on real-world use cases, offering insights that can help you decide which tool might best suit your needs.

GPT: A Specialist in Precision

When it comes to solving specific technical problems, whether in mathematics, logic, or coding, GPT has consistently proven itself a powerhouse. Its ability to dissect complex queries and deliver accurate, step-by-step solutions is remarkable. One memorable instance occurred while I was working on a Bayesian inference problem involving posterior distributions for a noisy dataset. Feeling stuck, I turned to Maths GPT, a specialised variant of GPT designed for mathematical challenges. After I entered the problem, I received not only a clear solution but also an explanation of the underlying concepts, such as prior distributions, likelihoods, and normalization, in a way that connected my textbook knowledge with practical application. This wasn’t a one-off; GPT has repeatedly demonstrated its precision across various technical domains.
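My original problem isn’t reproduced here, but the three pieces Maths GPT walked me through (prior, likelihood, and normalisation) can be sketched with a simple grid approximation. This is a sketch under stated assumptions: a toy coin-flip model with 7 successes in 10 trials and a uniform prior stands in for my actual noisy dataset, and the function name `grid_posterior` is hypothetical.

```python
from math import comb

def grid_posterior(successes, trials, grid_size=1001):
    """Approximate the posterior over a success probability theta on a grid.

    Assumes a uniform prior and a binomial likelihood (illustrative choices).
    """
    thetas = [i / (grid_size - 1) for i in range(grid_size)]
    prior = [1.0] * grid_size  # uniform prior: every theta equally plausible
    # Likelihood of the observed data at each theta. The binomial coefficient
    # cancels during normalisation but is kept so the formula reads naturally.
    likelihood = [
        comb(trials, successes) * t**successes * (1 - t) ** (trials - successes)
        for t in thetas
    ]
    unnormalised = [p * lik for p, lik in zip(prior, likelihood)]
    evidence = sum(unnormalised)  # normalising constant on the grid
    posterior = [u / evidence for u in unnormalised]
    return thetas, posterior

thetas, posterior = grid_posterior(successes=7, trials=10)
mean = sum(t * p for t, p in zip(thetas, posterior))
print(round(mean, 3))  # close to the exact Beta(8, 4) posterior mean, 8/12
```

Dividing by the summed evidence is the normalisation step. With a uniform prior the exact posterior is a Beta(8, 4) distribution, so the grid mean should land very close to 8/12 and the peak should sit at 0.7.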

Another example that stands out is from a coding project where I needed to optimise a merge sort algorithm for a large dataset. I asked GPT to explain the algorithm’s mechanics and suggest improvements. It responded with a detailed breakdown of the divide-and-conquer approach, complete with pseudocode and a discussion of its time complexity. Merge sort already runs in O(n log n) even in the worst case, so any practical optimisation targets constant-factor overhead rather than the asymptotic bound. GPT even provided a Python implementation tailored to my dataset’s characteristics, which I was able to integrate directly into my project. This level of specificity and reliability makes GPT a valuable tool for anyone working on focused, technical tasks.
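GPT’s tailored implementation isn’t reproduced here. As a hedged illustration only, this is one common way to tune merge sort in Python: fall back to insertion sort for short runs and skip the merge when two halves are already in order. The cutoff of 32 and the function names are my assumptions, not GPT’s output.

```python
def insertion_sort(items, lo, hi):
    """Sort items[lo:hi] in place; cheap for short runs."""
    for i in range(lo + 1, hi):
        key = items[i]
        j = i - 1
        while j >= lo and items[j] > key:
            items[j + 1] = items[j]
            j -= 1
        items[j + 1] = key

def merge_sort(items, cutoff=32):
    """Return a sorted copy of items using a hybrid merge sort.

    Runs no longer than `cutoff` (an assumed tuning value) use insertion
    sort, which trims overhead without changing the O(n log n) worst case.
    """
    data = list(items)
    buffer = data[:]  # one auxiliary buffer reused by every merge
    base = max(cutoff, 1)  # guard so the recursion always terminates

    def sort(lo, hi):
        if hi - lo <= base:
            insertion_sort(data, lo, hi)
            return
        mid = (lo + hi) // 2
        sort(lo, mid)
        sort(mid, hi)
        if data[mid - 1] <= data[mid]:
            return  # halves already in order: skip the merge
        buffer[lo:hi] = data[lo:hi]
        i, j = lo, mid
        for k in range(lo, hi):
            # Take from the left half on ties to keep the sort stable.
            if j >= hi or (i < mid and buffer[i] <= buffer[j]):
                data[k] = buffer[i]
                i += 1
            else:
                data[k] = buffer[j]
                j += 1

    sort(0, len(data))
    return data

print(merge_sort([5, 2, 9, 1, 5, 6], cutoff=2))  # [1, 2, 5, 5, 6, 9]
```

The right cutoff depends on the data and hardware, so in practice it is worth benchmarking a few values rather than trusting 32.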

ChatGPT further enhances this experience with a choice of specialised models and custom GPTs. Reasoning models like o4-mini-high, in my experience, excel at highly specific queries with little friction and few hallucinations, while the GPTs store offers task-specific variants such as Maths GPT. This customisation allows users to tailor the AI to their exact needs, whether it’s debugging code, solving equations, or analysing data structures.

xAI’s Grok: A Generalist’s Dream

In contrast to GPT’s laser-focused precision, xAI’s Grok takes a broader, more versatile approach that, in my experience, is particularly valuable for writing, research, and creative exploration. Its standout feature, DeepSearch, has been especially useful to me in academic and professional settings. For instance, I wanted to find recent research papers on the ethical implications of AI in healthcare, and I needed credible sources covering both the benefits, like improved diagnostics, and the risks, such as bias in decision-making algorithms. Using Grok’s DeepSearch, I quickly obtained a curated list of academic papers, industry reports, and ethical guidelines. The range of perspectives and the quality of the references saved me hours of manual searching, freeing my time and attention for actually reading about and understanding AI in healthcare.

Grok’s functionality doesn’t stop at research, of course. Its versatility shines in creative tasks as well. When I asked it to draft an outline for a blog post about AI’s future, Grok not only structured the piece logically but also suggested imaginative angles, such as AI’s potential role in space exploration, that I hadn’t considered. Similarly, in a side project exploring storytelling, Grok helped me write a short sci-fi narrative, weaving together plot points and character motivations with surprising coherence. This adaptability makes it a fantastic tool for users who need an AI that can pivot across different types of work.

For more complex research, the DeeperSearch option goes a step further. I once used it to investigate the intersection of quantum computing and machine learning, a niche topic with sparse resources, especially for newcomers to either field. After I gave Grok extra time to process, it returned a detailed summary backed by references to cutting-edge papers and technical blogs. This depth of exploration is evidence of Grok’s ability to handle multifaceted, open-ended tasks.

Limitations and Challenges

Despite their perks, both GPT and Grok have their share of limitations. GPT’s strength in precision can also be its downfall. Its responses, while accurate, can feel overly rigid or formulaic, particularly when creativity is required, which makes them sound “inorganic”. For example, when I asked GPT to brainstorm ideas for a creative coding project, say an interactive art installation, its suggestions were technically sound but lacked the tone and originality of something a person would write. Similarly, a request for a poem about AI yielded a structurally perfect piece that felt mechanical, missing the emotional depth I’d hoped for. In scenarios that call for a broader, more imaginative approach, GPT can fall short.

Grok, on the other hand, trades some of GPT’s precision for versatility, which occasionally leads to hiccups or hallucinations. One notable instance was when I asked Grok to explain Dijkstra’s algorithm for shortest-path finding. While the explanation was engaging and easy to follow, it stated the algorithm’s time complexity as O(V²) in all cases, overlooking priority-queue implementations that reduce it to O((V + E) log V) with a binary heap. This is a typical “hallucination”: a plausible but incorrect detail. It meant I had to double-check the output, which slightly weakens Grok’s reliability for quick technical answers. In another case, a coding query produced a nearly correct solution that needed additional tweaking, further suggesting that Grok is not always the best fit when pinpoint accuracy matters.
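For reference, the heap-based variant that Grok’s explanation overlooked looks roughly like this in Python, using the standard library’s `heapq` module. The adjacency-list format and the example graph are my own assumptions for illustration. The O(V²) figure comes from the dense-array version, which scans every vertex to find the next minimum; that version is still a reasonable choice for dense graphs.

```python
import heapq

def dijkstra(graph, source):
    """Shortest-path distances from source.

    graph maps each node to a list of (neighbor, weight) pairs with
    non-negative weights. With a binary heap this runs in O((V + E) log V).
    """
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist

graph = {
    "A": [("B", 4), ("C", 1)],
    "C": [("B", 2), ("D", 5)],
    "B": [("D", 1)],
}
print(dijkstra(graph, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```

Rather than decreasing keys in place, this sketch pushes duplicate entries and skips stale ones when popped, which keeps the code short while preserving the same asymptotic bound.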

Illustrating Examples

To further highlight the differences between GPT and Grok, I’ve included two specific use cases: a hard coding problem and a research problem about Retrieval-Augmented Generation (RAG). Below, you’ll find the prompt for each, along with an overview of how each AI handled the task. For the hard coding problem, I used ChatGPT’s o4-mini-high model and Grok 3 with its Think mode. For the RAG research problem, I used the same ChatGPT model with the Deep Research function and Grok with its DeeperSearch function.

Hard Coding Problem

Prompt:

“Explain and provide a solution for the following problem: Given a list of integers, find the length of the longest subsequence that is first increasing and then decreasing. For example, in the list [1, 11, 2, 10, 4, 5, 2, 1], the longest such subsequence is [1, 2, 10, 2, 1] with length 5.”

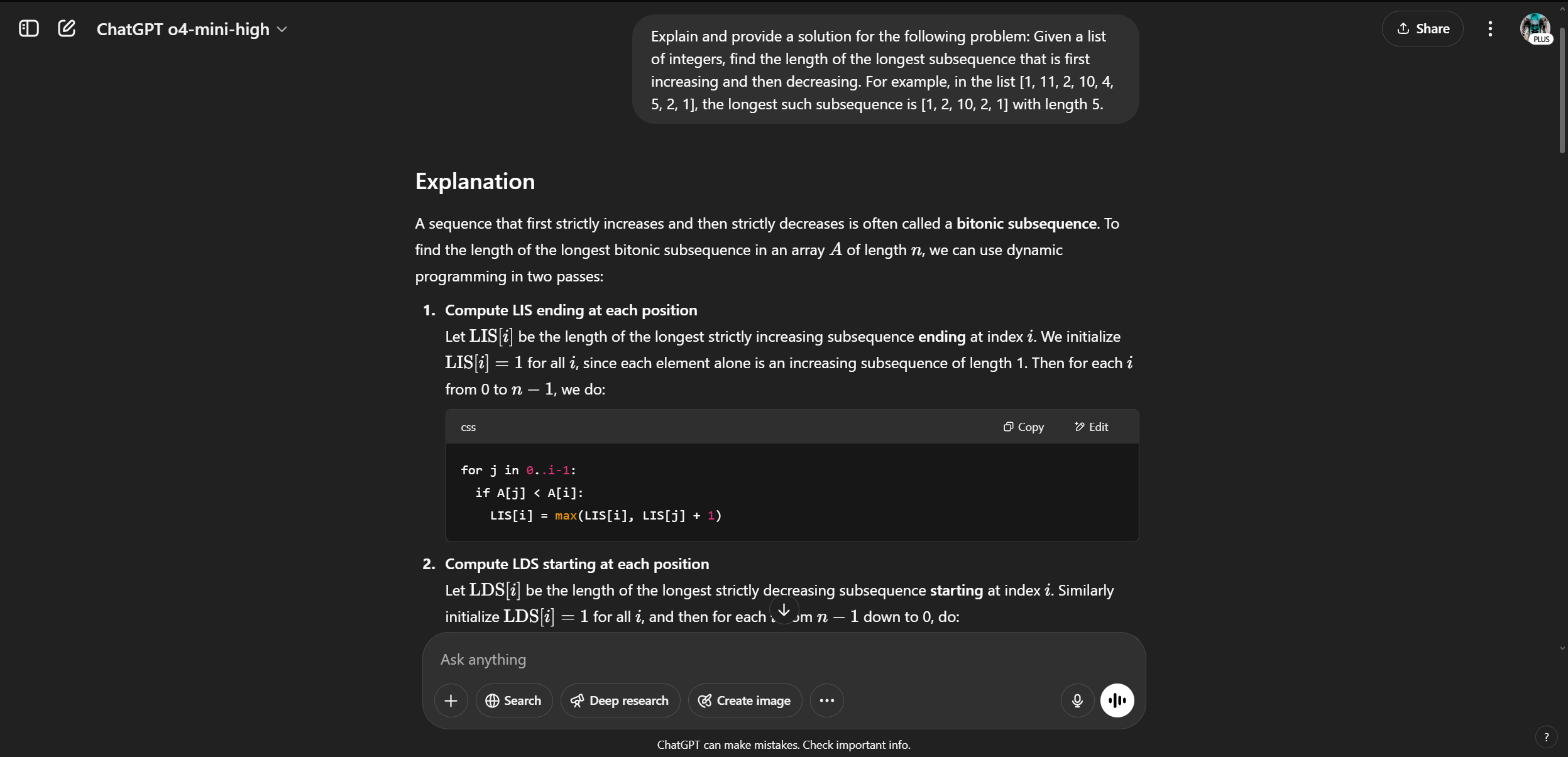

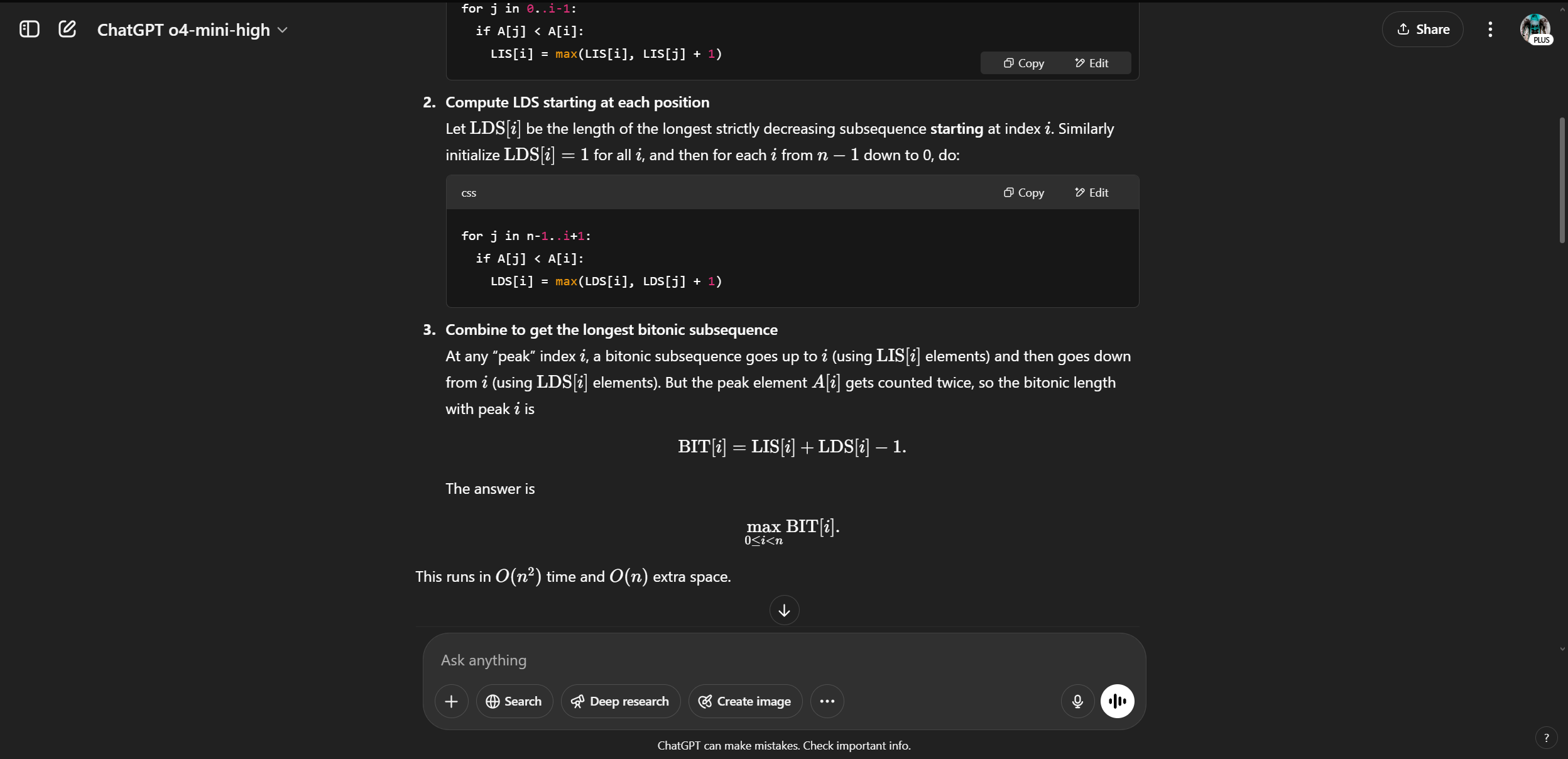

- ChatGPT’s Response (almost instant):

… (skipping to the end, as the full response is too long to include.)

…

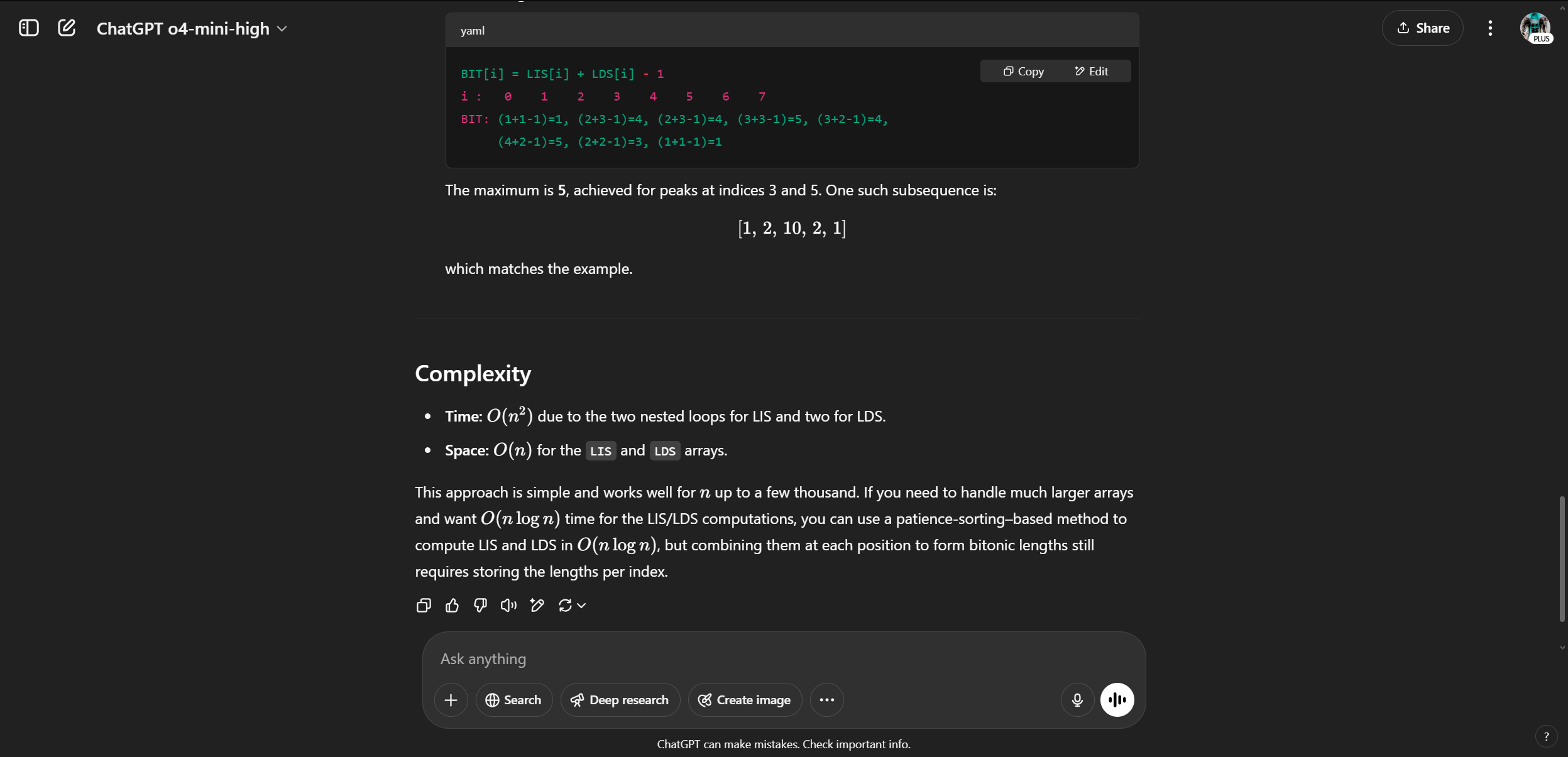

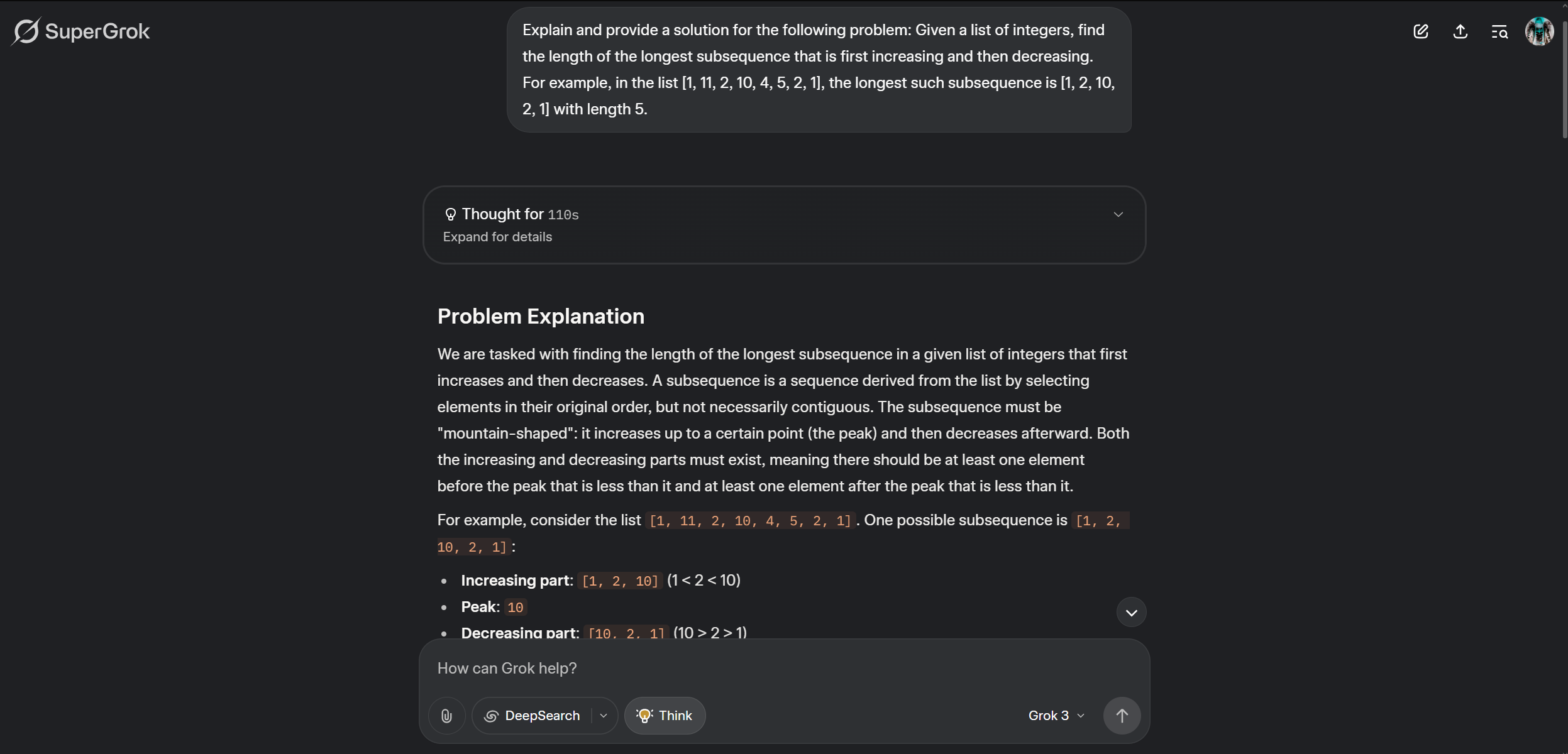

- Grok’s Response (took 110 seconds):

… (skipping to the end, as the full response is too long to include.)

…

Results overview

Problem Statement

Find the length of the longest subsequence in a list of integers that first increases and then decreases. Example list: [1, 11, 2, 10, 4, 5, 2, 1], with the stated longest subsequence as [1, 2, 10, 2, 1] (length 5).

GPT Results

- Approach: Dynamic programming with LIS (Longest Increasing Subsequence) and LDS (Longest Decreasing Subsequence) arrays, combining them as LIS[i] + LDS[i] – 1 for each peak i.

- Output: Code outputs 6 (e.g., [1, 2, 10, 4, 2, 1]), but walkthrough claims 5 due to an LDS error (corrected LDS should be [1, 5, 2, 4, 3, 3, 2, 1]).

- Strengths: Detailed explanation, correct Python implementation, O(n²) time complexity.

- Weakness: Walkthrough contains an LDS computation error, though code is accurate.
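Neither model’s code is reproduced in this article, but the LIS + LDS - 1 approach both responses used can be sketched in a few lines of Python. The function name is my own, and strict inequalities are assumed on both sides. Running it on the example list reproduces the corrected LDS array above and a length of 6; adding Grok’s extra LIS[i] >= 2 and LDS[i] >= 2 condition would not change the answer for this input.

```python
def longest_bitonic_length(nums):
    """Length of the longest strictly increasing-then-decreasing subsequence."""
    n = len(nums)
    # lis[i]: longest strictly increasing subsequence ending at index i
    lis = [1] * n
    for i in range(n):
        for j in range(i):
            if nums[j] < nums[i]:
                lis[i] = max(lis[i], lis[j] + 1)
    # lds[i]: longest strictly decreasing subsequence starting at index i
    lds = [1] * n
    for i in range(n - 1, -1, -1):
        for j in range(i + 1, n):
            if nums[j] < nums[i]:
                lds[i] = max(lds[i], lds[j] + 1)
    # Every index is a candidate peak; subtract 1 so the peak is counted once.
    best = max((lis[i] + lds[i] - 1 for i in range(n)), default=0)
    return best, lis, lds

best, lis, lds = longest_bitonic_length([1, 11, 2, 10, 4, 5, 2, 1])
print(lis)   # [1, 2, 2, 3, 3, 4, 2, 1]
print(lds)   # [1, 5, 2, 4, 3, 3, 2, 1]
print(best)  # 6
```

Both passes are nested loops over the list, which is where the O(n²) running time mentioned above comes from.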

Grok Results

- Approach: Similar dynamic programming, with an added condition that LIS[i] >= 2 and LDS[i] >= 2 for non-trivial parts.

- Output: Correctly outputs 6 (e.g., [1, 11, 10, 5, 2, 1]), notes discrepancy with example’s length of 5.

- Strengths: Accurate computation, highlights example inconsistency, robust C++ implementation.

- Weakness: Explanation less detailed than GPT’s.

Result conclusion

GPT slightly outperforms Grok thanks to its comprehensive explanation, accessible Python code, and much faster response time, despite a minor walkthrough error. Both solutions correctly compute a length of 6, which shows that the example’s stated length of 5 is wrong: [1, 2, 10, 4, 2, 1] is a valid increasing-then-decreasing subsequence of length 6. GPT’s clarity makes it the better choice for technical problem-solving and education.
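To make the point concrete, the same DP can record where each LIS and LDS value came from and then walk outward from the best peak to recover an actual subsequence. This is my own sketch rather than either model’s code, and the function name is hypothetical. Ties are broken by keeping the first optimum found, so it returns [1, 11, 10, 4, 2, 1]; other length-6 answers, such as [1, 2, 10, 4, 2, 1], are equally valid.

```python
def longest_bitonic_subsequence(nums):
    """Return one longest strictly increasing-then-decreasing subsequence."""
    n = len(nums)
    if n == 0:
        return []
    lis, lis_prev = [1] * n, [-1] * n  # previous index on the rising side
    for i in range(n):
        for j in range(i):
            if nums[j] < nums[i] and lis[j] + 1 > lis[i]:
                lis[i], lis_prev[i] = lis[j] + 1, j
    lds, lds_next = [1] * n, [-1] * n  # next index on the falling side
    for i in range(n - 1, -1, -1):
        for j in range(i + 1, n):
            if nums[j] < nums[i] and lds[j] + 1 > lds[i]:
                lds[i], lds_next[i] = lds[j] + 1, j
    # First index with the largest LIS + LDS - 1 becomes the peak.
    peak = max(range(n), key=lambda i: lis[i] + lds[i] - 1)
    rising, k = [], peak
    while k != -1:  # walk backwards from the peak
        rising.append(nums[k])
        k = lis_prev[k]
    falling, k = [], lds_next[peak]
    while k != -1:  # walk forwards after the peak
        falling.append(nums[k])
        k = lds_next[k]
    return rising[::-1] + falling

print(longest_bitonic_subsequence([1, 11, 2, 10, 4, 5, 2, 1]))  # [1, 11, 10, 4, 2, 1]
```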

Research Problem on RAG

Prompt:

“Discuss a technical deep dive for Retrieval-Augmented Generation (RAG) model. What are its key components, and how does it differ from traditional language models? Provide insights into its advantages and potential limitations.”

- ChatGPT’s Response (took 5 minutes):

… (skipping to the end, as the full response is too long to include.)

…

- Grok’s Response (took 1 minute 41 seconds):

… (skipping to the end, as the full response is too long to include.)

…

Results overview

After evaluating the responses from ChatGPT and Grok to the prompt requesting a technical deep dive into the Retrieval-Augmented Generation (RAG) model with a focus on finance, I found Grok’s result to be better thanks to its concise, structured, finance-tailored approach.

ChatGPT delivers an exhaustive explanation, detailing RAG’s components (encoder, retriever, generator), its differences from traditional LLMs, and finance applications like document summarisation and compliance monitoring, but its length and technical depth risk overwhelming readers.

Grok, by contrast, outlines RAG’s core components (retrieval, generation, and augmentation) in a clear, digestible format and directly addresses finance-specific use cases such as real-time customer service and risk assessment. It also highlights critical sector challenges like latency and regulatory compliance, integrates citations seamlessly for credibility, and concludes with a forward-looking perspective on RAG’s potential in finance. This combination of clarity, relevance, and actionable insight makes Grok’s response more effective than ChatGPT’s broader but less targeted detail for finance professionals seeking a practical, focused understanding of RAG’s impact.

Time also favours Grok: ChatGPT took 5 minutes to complete its search, while Grok took only 1 minute and 41 seconds.
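Neither response is reproduced here, but the components both describe (a retriever, an augmentation step, and a generator) can be sketched in plain Python. This is a toy under loud assumptions: a bag-of-words term-count vector stands in for a learned encoder, the three finance snippets are invented for illustration, the function names are hypothetical, and the generator (an LLM call) is left out entirely.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy encoder: term counts, standing in for a neural embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Retriever: rank documents by similarity to the query, keep the top k."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Augmentation: prepend retrieved passages so the answer can be grounded."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"

# Hypothetical knowledge base; a real system would index many documents.
docs = [
    "Latency budgets matter for real-time customer service chatbots.",
    "Regulatory compliance requires audit trails for model outputs.",
    "Credit risk assessment combines market data with loan histories.",
]
query = "How does RAG help with credit risk assessment?"
prompt = build_prompt(query, retrieve(query, docs, k=1))
print(prompt)
# A generator (an LLM call, not shown) would receive this augmented prompt.
```

The key difference from a traditional language model is visible in `build_prompt`: the model’s answer is conditioned on retrieved text at query time rather than relying only on what it memorised during training.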

User Experience

The user experience of both tools reflects their design philosophies, at least as I see it. GPT’s interface is minimalist and intuitive, with a clean layout that makes it easy to input prompts and receive concise responses. The GPTs store adds a layer of convenience, letting me switch between models, like from a general-purpose GPT to Maths GPT, in seconds. This streamlined approach is perfect for users who value efficiency and simplicity.

Grok’s interface, in contrast, is more feature-rich, integrating DeepSearch seamlessly into the workflow. This is an asset for research-heavy tasks, as it combines AI-generated content with web-sourced information in one place. However, the abundance of options can feel overwhelming at times, especially for users who prefer a stripped-down experience. Speed-wise, GPT tends to beat Grok for quick queries, while Grok’s deeper research capabilities come at the cost of slightly longer processing times, particularly with DeeperSearch.

Conclusion

Reflecting on my experiences, GPT and Grok each have their own pros and cons in the AI landscape. GPT excels at precision-driven tasks like solving equations, debugging code, or analysing algorithms; its specialised models and reliable outputs make it a go-to for technical professionals and students. Grok, on the other hand, works well as a versatile all-rounder, excelling in research, writing, and creative tasks thanks to features like DeepSearch. In my testing, it handled these diverse tasks better than ChatGPT.

Choosing between them comes down to your specific needs. If you’re tackling a coding problem or a math puzzle, GPT is the stronger choice. If you’re drafting a paper, brainstorming ideas, or navigating a research maze, Grok has the edge. Both tools have improved my work in their own ways, and as AI technology advances, I’m excited to see how they evolve, perhaps closing their current gaps and becoming even more valuable.

Final Thoughts

The rapid evolution of AI promises a future where tools like GPT and Grok will only grow more capable. My time with them has been full of interesting discoveries, revealing just how much potential these technologies hold. Whether solving tricky problems or exploring new ideas, they’ve already reshaped how I approach my work, and I suspect they’ll do the same for you.